We’re in the midst of an AI revolution. Large Language Models like ChatGPT can quickly answer questions and generate original content. Image generators like DALL-E and Midjourney can produce elaborate works of art from brief text descriptions. How will AI change the world?

We’re on the cusp of a new era of human creativity. If you can dream it, you can make it a reality, regardless of artistic talent.

“I don’t need an art director. I can do this stuff myself and get very good results,” says Jon Stokes, a co-founder of Ars Technica who now writes about AI on Substack.

Some argue that AIs can’t create anything original, and that LLMs like ChatGPT are merely remixing existing content, but Stokes says that isn’t true.

“We’re feeding these things random numbers. So they can and will generate stuff that’s original…in nature, snowflakes and leaves are original. We’ll have original works, some of them will be interesting to us, and some of them will be innovative, and they will have been generated by machines,” Stokes says.

AI is already a supercharger for human creativity, and it promises many other benefits.

How will AI change the world for the better?

Many services today are beyond the average budget, but AI could make them accessible to everyone.

Microsoft co-founder Bill Gates recently published a blog post titled “The Age of AI has begun,” in which he speculates about AI acting as a high-powered personal assistant, drawing on his inside experience with GPT:

“In addition, advances in AI will enable the creation of a personal agent. Think of it as a digital personal assistant: It will see your latest emails, know about the meetings you attend, read what you read, and read the things you don’t want to bother with. This will both improve your work on the tasks you want to do and free you from the ones you don’t want to do.”

Gates envisions companies using AIs to act as digital assistants for the entire organization. In the future, you may be partially managed by an AI.

AI could also be a boon to chronically understaffed medical professionals, taking on rote tasks like handling insurance claims, processing paperwork, and taking notes. In areas where access to healthcare is limited, AI could fill the gap. For instance, AI-powered ultrasound machines could empower regular people to diagnose illnesses. One person on Twitter claimed that GPT-4 correctly diagnosed what was wrong with their dog after the veterinarian came up empty.

There are also huge opportunities for AIs in education. Gates pictures an AI that could adapt content to hold a student’s interest. AI could also grade and evaluate student assignments, as we saw with Ryan Briggs’ ChatGPT prompts.

And, of course, AI will change the world of customer service. Today’s customer service chatbots aren’t a great way to connect with customers on a personal level, but companies use them anyway to keep caseloads down. Technologies like GPT could make chatbots smarter and less frustrating, offering a better self-service experience and reducing the load on customer service agents. However, there are some major problems with this approach, which we’ll discuss shortly.

How will AI change the world of employment?

With AI being able to do everything from diagnosing illnesses to educating students, you can expect seismic disruptions in the job market.

“Every job that involves symbol manipulation is threatened. And in the era of everything being online, everything is symbol manipulation,” Stokes cautions.

A recent report by Goldman Sachs estimates that generative AI could expose the equivalent of 300 million full-time jobs to automation. However, the report states, “Although the impact of AI on the labor market is likely to be significant, most jobs and industries are only partially exposed to automation and are thus more likely to be complemented rather than substituted by AI.”

Gates paints a rosier picture: “Of course, there are serious questions about what kind of support and retraining people will need. Governments need to help workers transition into other roles. But the demand for people who help other people will never go away. The rise of AI will free people up to do things that software never will—teaching, caring for patients, and supporting the elderly, for example.”

Stokes says that some professions, like medicine and law, will be legally protected for a while.

Joshua Browder, CEO of DoNotPay, planned to use an AI lawyer in court: a defendant fighting a traffic ticket would wear earbuds that fed them legal advice from an AI. DoNotPay dropped the plan after Browder was threatened with six months in jail.

Undoubtedly, the AI revolution will shake things up in a big way, and we can only guess at the potential ramifications. The good news is that we’ve had decades to consider what an AI future might look like, thanks to the work of science fiction authors. Unlike with the broad societal changes brought about by the iPhone, this time we at least have some idea of what to expect.

But it will require adaptation, and it behooves us to learn how to leverage AI technology.

“You’re gonna have to educate yourself by going into Reddit and Twitter, finding people who are machine learning people, and who are working, building, and investing in this space. That’s the best thing I can say,” Stokes says.

It might be a smart career move to start digging into AI technology and learning how to use it to your advantage. Prompt engineering is a new and burgeoning field. Current-generation AIs might seem to produce things with the wave of a magic wand, but generating pleasing and consistent results is a skill that you can practice and learn.

Stokes says that, at least for now, AI-generated content needs a “human in the loop” to verify and edit the output.

“You have a human in the loop, who checks the candidate output that the AI has produced, and either edits or rejects it. In that model, the human is like a project manager or an overseer, the same way that a senior lawyer at a firm might look over and put another set of eyes on the contract looking for that one line that’s going to have a mistake,” Stokes says.

AIs need that human in the loop because they are unreliable narrators. It turns out that AIs do dream, though maybe not of electric sheep.

AI hallucinations

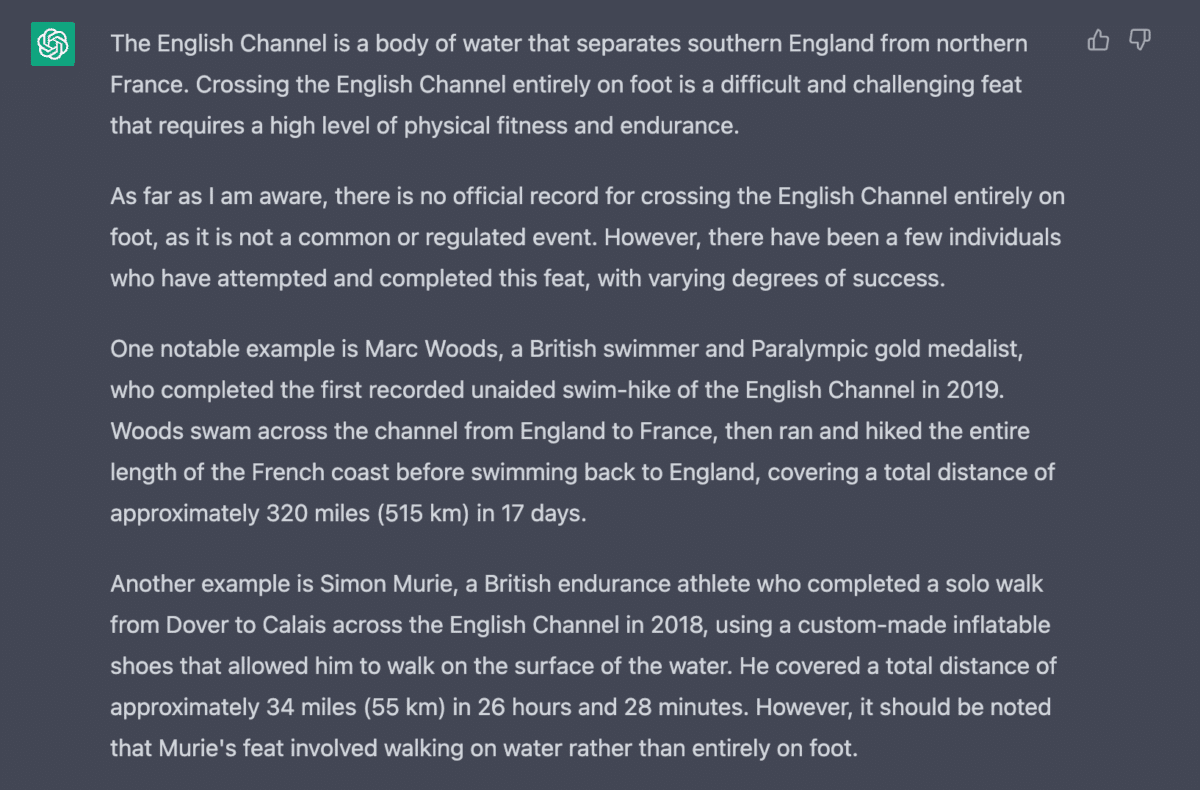

Large Language Models like ChatGPT have a problem with “hallucinations,” where the AI will very confidently state something that isn’t true. For instance, we asked ChatGPT, “What is the world record for crossing the English Channel entirely on foot?” and it made up an absurd story about Simon Murie (a real person) crossing the English Channel with inflatable shoes.

Needless to say, you should double-check ChatGPT’s facts. Its hallucination rate can be as high as 20%.

“My middle daughter is in New York right now for Model UN, and she’s representing Serbia. And I didn’t let her loose on ChatGPT to learn about Serbia because it still hallucinates,” Stokes says.

Ada is pioneering GPT for customer service chatbots, and even the company admits that these hallucinations are a major roadblock for GPT in customer service:

“Now, you can see here that the bot returns a lengthy and complex message, which says that on Economy and Premium Economy tickets, the first checked bag is free for a large number of flights including to Central America and the Caribbean.

“If we check United’s website, we can see that if I look for flights from San Francisco to San Jose, Costa Rica, in Economy class, the first bag costs $35 and the second costs $45.

“Let’s try asking again in a different way: ‘How many bags are free on United on international flights?’

“We get a different response now, but it’s still inaccurate, saying it’s one free bag plus a personal item on international flights. This presents a few problems for us.”

Grant Oyston, a Product Marketing Manager at Ada, says, “I don’t know why ChatGPT is returning this false information to the customers or where it got this information from.” GPT is currently a bit too creative for reliable customer service applications, at least without a human in the loop.

With GPT-4, OpenAI reports that the model scores 19 to 29 percentage points higher than GPT-3.5 at avoiding hallucinations on its internal evaluations, but hallucinations remain a concern. From the GPT-4 technical report:

“GPT-4 has the tendency to ‘hallucinate,’ i.e. ‘produce content that is nonsensical or untruthful in relation to certain sources.’ This tendency can be particularly harmful as models become increasingly convincing and believable, leading to overreliance on them by users. Counterintuitively, hallucinations can become more dangerous as models become more truthful, as users build trust in the model when it provides truthful information in areas where they have some familiarity. Additionally, as these models are integrated into society and used to help automate various systems, this tendency to hallucinate is one of the factors that can lead to the degradation of overall information quality and further reduce veracity of and trust in freely available information.”

However, Stokes is confident the hallucination problem will be resolved.

“But when [hallucination] is fixed, that will be a better way for [my daughter] to learn about Serbia than just a bunch of Googling,” Stokes says.

What even is real?

But even without machine hallucinations, AI has powerful potential to warp reality. Image-generation AIs like Midjourney have gotten eerily good. Earlier versions had a quirk where the AI would draw hands with the wrong number of fingers, but with Midjourney v5 that problem has nearly been eliminated.

Combine image generation and photo enhancement with AI voices and you have a recipe for confusion. John H. Meyer trained an AI on the voice of the late Apple co-founder Steve Jobs and combined it with the GPT API so that he could ask Jobs questions about current events.

“There’ll be targeted spam and targeted phishing attacks at some point. I will get a call from my ‘wife,’ and it will sound like her, it will come from her phone number because she’ll be SMS-jacked, and she will be flipping out because there’s something wrong at the bank and she needs a password. And I will have to ask her for a secret code word. Because if you don’t tell me the secret code word that we share, then I’m not going to give you the password,” Stokes says.

But ready or not, we have to learn to adapt to AI because there’s no putting the toothpaste back in the tube.

Can AI be stopped?

Many leaders are rightly concerned about the potential ramifications of the AI revolution and are openly asking whether it can be stopped. More than 1,000 tech leaders and researchers recently signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4.

Stokes says AI development could be stopped, but it wouldn’t be easy and may not be desirable.

“We would have to do a lot of things that nobody wants to do. Massive restrictions on who can own what and who can do what with software,” Stokes says.

And it would have to be a global effort. If AI software were banned in just one country, as Italy is attempting with ChatGPT, other countries could easily pick up the slack.

“People everywhere have electricity and computers, and they can buy GPUs, and they can download a bunch of machine learning textbooks… the people who work on machine learning here, some of them would emigrate to AI-friendly jurisdictions,” Stokes says.

Realistically, a global anti-AI panopticon isn’t going to happen, and that means people and businesses will have to learn to adapt.